Have you ever read a non-technical article about public and private keys, but found it all just a bit too vague and unsatisfying? If so, this piece is for you…

Nic Carter published a good piece the other week on how Bitcoin’s security works, in the context of quantum computing. It’s not easy to explain this stuff and I thought he did a really good job.

He talks about digital signatures, and how difficult it is to impart intuition for how they work. I think this is because it’s really hard to explain how public key cryptography works. And if a reader has no mental model for the latter then they’re never going to develop intuition for the former.

How to explain the big idea behind public and private keys?

This piece might look long at first – but don’t be put off. It’s really just a super simple sequence of steps… something that a child with a basic understanding of multiplication could understand. Yet, by the end, you’ll have learned how to convince somebody you know a secret without them having any clue whatsoever what that secret is. And this trick – convincing somebody of something without showing it to them – is at the heart of public key cryptography.

So here goes…

How do you keep secrets when everything is public?

A fundamental problem that blockchain systems have is that everything happens in public… everything is potentially visible to everybody else. So how do you keep things secure? How do you prove you own a token when you want to spend it, without revealing your ‘password’ to everybody else?

This problem is directly analogous to the one faced by the Forty Thieves in the famous Arabian folk tale: how to secure their cave?

Their solution was reasonable enough at first: a secret phrase. Anybody who knew this phrase, literally a password – ‘Open Sesame!’ – merely had to shout it at the entrance and the stone securing the cave would roll away. Simple, and effective. At least for a while.

The problem, just like ours, was eavesdroppers.

The act of using a password also reveals the password to anybody nearby who might be listening.

Ali Baba was one such eavesdropper. He overheard the thieves one day and learned the password. He was then able to gain access to the cave, and to explore the riches within.

So our challenge is: can we come up with a way to prove you know a password without actually saying it out loud?

What could the thieves have done to make their system more secure?

Don’t be downhearted if you can’t figure out an answer: nobody else knew how to solve it until a few decades ago. But transactions on public blockchains remain safe only because it has been solved.

A simple number game that is surprisingly profound

To get us started with a potential solution I want you to temporarily pretend to believe something that sounds obviously untrue. I want you to imagine that nobody in the world – nobody whatsoever – knows how to factorise numbers.

Imagine you are presented with the number 6. I need you to believe that nobody could figure out that it is 2 x 3.

Similarly, somebody might give you the number 15 and you’ll have no concept that it is 3 x 5. You see 15, but which smaller numbers it may be made up of is just a complete mystery to you. You would consider it absurd even to try to figure out the answer.

Here’s a game you could play with a friend, Alice, in a world where nobody can factorise numbers.

First, pick two numbers at random, in secret. Perhaps you pick 3 and 5. Keep them to yourself for now.

Now you take your numbers – 3 and 5 – and multiply them together, getting 15.

At this point, you know 3 and 5, and you know they multiply together to get 15.

Share one of your smaller numbers – let’s say 5 – with Alice, along with the bigger number, 15.

So, you know 3, 5, 15. And Alice knows just 5 and 15.
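If it helps to see the setup written down, here is a minimal Python sketch of who knows what at this point. The variable names are just illustrative labels for this toy game, not standard terminology.

```python
# Toy setup for the number game (names are illustrative labels only).
secret_factor = 3                        # known only to you
shared_factor = 5                        # handed to Alice
product = secret_factor * shared_factor  # 15, also handed to Alice

you_know = {secret_factor, shared_factor, product}
alice_knows = {shared_factor, product}

print(sorted(you_know))     # [3, 5, 15]
print(sorted(alice_knows))  # [5, 15]
```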

You say to Alice:

“Here is a number: 15. One of the factors is 5. I also know the other factor. I’m going to prove that I know this to you. When this game is over, you will fully believe that I know the other factor, but you will have no idea what it is, and neither will anybody else.”

Proving you know something without revealing it

Here’s how you could prove to Alice that you really do know how to factorise 15 without ever revealing that one of the factors is 3.

The trick is that you ask Alice to think of another random number, that she will keep secret from you.

Let’s say Alice picks 13 as her secret number.

Ask her to do two things:

First she multiplies her secret number – 13 – by the number she can’t factorise – 15 – to get 195, which she also keeps to herself.

Second, she multiplies the secret number – 13 – by the factor of 15 she does know – 5 – to get 65.

So, she knows 13 x 5 (65) and she knows 13 x 15 (195). She doesn’t know the other factor of 15 (3), of course, but she is aware of something important: she knows that if 65 were multiplied by the secret factor – whatever it is – the answer would be 195.

She knows this because multiplying by a number is the same as multiplying by all its factors. For example, multiplying by 6 is the same as multiplying by 2 and then multiplying the result by 3.

So, even though she doesn’t know your other secret factor she knows that if you multiply 65 by your secret factor – whatever it is – you’re guaranteed to get 195.

To emphasise: she knows this because 195 is 13 x 15, which must also be the same as 13 x 5 multiplied by the secret factor.

But only somebody who had knowledge of that secret factor – you – could do this.
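Alice's side of the arithmetic can be sketched in a few lines of Python. The names are illustrative. The value `other_factor` appears only so the script can check the claim; in the game, Alice never sees it.

```python
product = 15         # the number Alice cannot factorise
shared_factor = 5    # the one factor she was given
alice_secret = 13    # her random number, kept to herself

expected = alice_secret * product          # 195, kept to herself
challenge = alice_secret * shared_factor   # 65, the number she will hand over

# Multiplying by 15 is the same as multiplying by 5 and then by the other factor.
other_factor = 3     # NOT known to Alice; used here only to verify the identity
assert challenge * other_factor == expected
```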

She now shares the number 65 with you, which she alone knows is 13 x 5. You, of course, have no idea how to factorise this number so you don’t know that 65 is 13 x 5. All you see is 65.

Alice now challenges you to multiply 65 by the secret factor.

So you perform the calculation:

65 x 3 = 195.

Recall that the only person who could independently perform this step of the calculation is somebody who knows the secret factor: you.

After sharing your answer – 195 – with Alice, she checks this against her own calculation (13 x 15), and sees that you have both calculated 195.

But the only number she had shared with you was 65, which only she knows is 13 x 5.

She therefore concludes that you must definitely know the other, secret factor of 15, because it was only by multiplying 65 by the secret factor that you could possibly have figured out the answer to her challenge.

You have proved to her that you know the secret factor, without revealing it to her.
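The whole exchange can be sketched as three small Python functions. This is a toy under the pretend rule that nobody can factorise; the function names (`make_challenge`, `respond`, `verify`) and the range of the random number are assumptions made for illustration.

```python
import secrets

def make_challenge(product, shared_factor):
    """Alice's side: pick a fresh random number and derive both values."""
    r = secrets.randbelow(10**6) + 2            # her secret number, at least 2
    return r * shared_factor, r * product       # (sent to you, kept by Alice)

def respond(challenge, secret_factor):
    """Your side: multiply the challenge by the secret factor."""
    return challenge * secret_factor

def verify(response, expected):
    """Alice's check: does your answer match the one she kept?"""
    return response == expected

challenge, expected = make_challenge(product=15, shared_factor=5)
print(verify(respond(challenge, 3), expected))  # True: you know the factor
print(verify(respond(challenge, 4), expected))  # False: a wrong guess fails
```

Note that the secret factor is only ever passed to `respond`, which runs on your side. Alice sees nothing but the final answer.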

And here’s another cool thing: the next time somebody comes along, she can pick a different random number… maybe 23. She’d calculate 23 x 15 = 345, and 23 x 5 = 115, and share the 115. The only way the next person could perform the right calculation (115 x 3 = 345) is if they knew the secret factor, 3.

An attacker who had eavesdropped on the previous conversation would have learned nothing of any use in answering the new challenge.
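Here is a small Python sketch of why replaying an overheard answer fails, using the same numbers as above (13 for the first round, 23 for the second). The variable names are illustrative.

```python
product, shared_factor, secret_factor = 15, 5, 3

# Round 1, overheard by the eavesdropper: Alice's secret number was 13.
overheard_challenge = 13 * shared_factor                   # 65
overheard_response = overheard_challenge * secret_factor   # 195

# Round 2: Alice picks a new secret number, 23.
new_challenge = 23 * shared_factor   # 115
new_expected = 23 * product          # 345

# Replaying the old answer does not satisfy the new challenge...
print(overheard_response == new_expected)             # False
# ...but somebody who knows the secret factor still passes.
print(new_challenge * secret_factor == new_expected)  # True
```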

Isn’t that amazing? We’ve figured out a way to prove to somebody that you know a secret password without anybody, not an eavesdropper and not even the person you’re proving it to, learning what it is!

The idea underpinning this example – that if factorisation is impossible or very difficult then you can prove you know things without revealing them – sounds absurd. But it turns out there are many mathematical systems where operations akin to factorisation are indeed extremely hard. In other words, my ludicrous assumption – that factorising numbers is hard – isn’t actually all that ludicrous in the context of the mathematical systems used by modern cryptographers.

Of course, real cryptographic systems work differently to this, but not by as much as you might think… if you can assume an operation such as factorisation of numbers is difficult, you can build a system that lets you use a password without ever revealing what it is.
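To give a flavour of how small the gap can be, here is a toy Python sketch that swaps plain multiplication for modular exponentiation. Undoing that operation (the discrete logarithm problem) is believed to be hard when the numbers are large. The parameters and names below are assumptions chosen for illustration. This is not the scheme Bitcoin or any real system uses, and the numbers are far too small to be secure.

```python
import secrets

p, g = 23, 5                 # toy public parameters (far too small for real use)
secret = 6                   # the 'password', never shared
public = pow(g, secret, p)   # published for everybody to see

# Alice picks a fresh random exponent and keeps it to herself.
r = secrets.randbelow(p - 2) + 1     # somewhere in 1..p-2
challenge = pow(g, r, p)             # sent to you
expected = pow(public, r, p)         # kept by Alice

# Only somebody who knows `secret` can turn the challenge into the answer.
response = pow(challenge, secret, p)
print(response == expected)          # True
```

The shape is the same as the number game: Alice combines her random number with both public values, shares one result, and checks your answer against the other.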

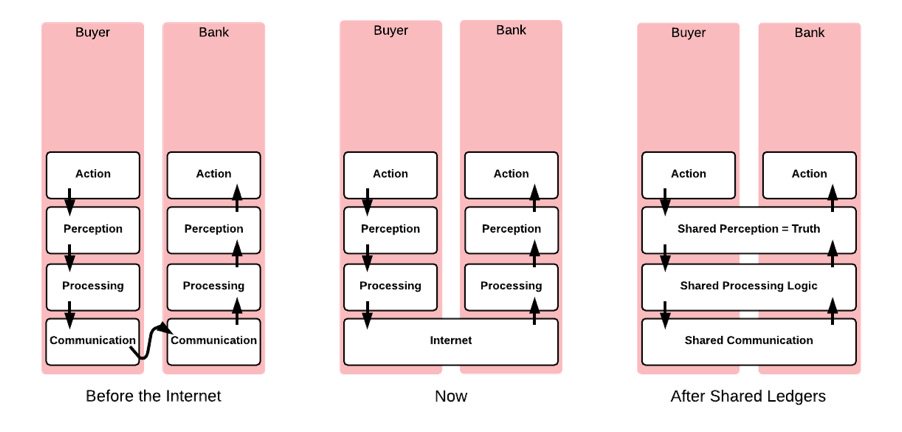

We call such schemes public key cryptography, and they’re the basis of how Bitcoin – and other blockchains – stay secure, even though everything happens completely in public.

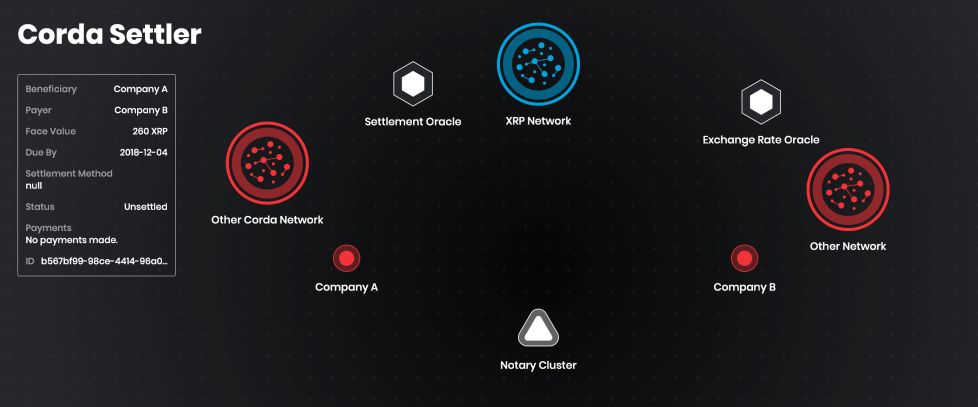

This trick – proving you know a secret without revealing it – is the heart of modern blockchain security. It’s why users can transact in full public view without ever exposing the keys that control their assets. And it’s precisely this cryptographic foundation that allows institutions, such as those I’m bringing to Solana in my role as CEO of R3 Labs, to issue and trade real-world assets on public networks with confidence.

The idea that finally solved the Forty Thieves’ problem, mere decades ago, now gives institutions the confidence they need to issue billions of dollars of value on public chains. What a remarkable story.